AI - Australia

Article II:

Syllabus Areas:

GS II - Governance

On October 27, Australia took a decisive stance in the global debate over artificial intelligence and copyright law. Attorney General Michelle Rowland firmly rejected proposals that sought to grant technology companies unrestricted rights to mine copyrighted material for AI training. This move places Australia among the few democracies willing to draw ethical boundaries around AI development, asserting that creators must retain control over their work and share in the value it generates.

The Core of the Issue

At the centre of this controversy lies a fundamental question: should AI companies be allowed to use copyrighted works such as books, music, films, and journalism to train their models without explicit permission or compensation to creators?

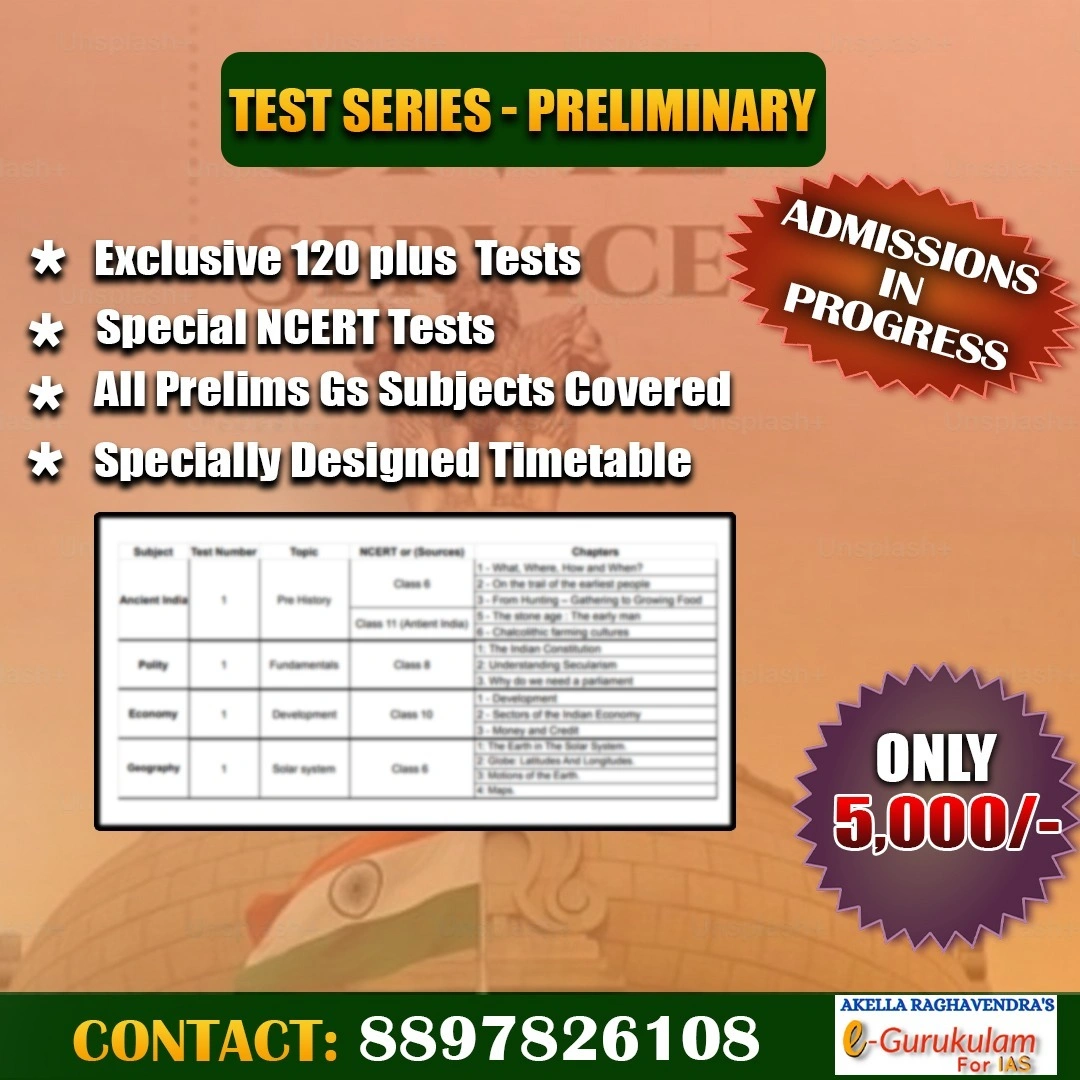

The debate intensified when Australia’s Productivity Commission, an independent government-backed body, proposed an exemption under copyright law to allow text and data mining (TDM) for AI development.

In its report, Harnessing Data and Digital Technology, the Commission argued that loosening restrictions would:

- Encourage foreign investment,

- Unlock billions in economic potential, and

- Position Australia as a global AI innovation hub.

However, the report was heavily criticised for:

- Lack of consultation with artists and media houses,

- Failing to assess the impact on the creative economy, and

- Appearing to favour corporate interests over cultural preservation.

Government’s Response: Balancing Innovation and Rights

Attorney General Michelle Rowland rejected the proposal, reaffirming that technological progress cannot come at the cost of creative integrity.

She noted that:

“Australian creatives are not only world class, but they are the lifeblood of our culture. We must ensure the right legal protections are in place.”

Recognising AI’s potential to transform industries, the government nevertheless insisted that creators must benefit from the digital revolution.

To chart a balanced path forward, the government established a Copyright and AI Reference Group (CAIRG) tasked with:

- Exploring a new paid licensing framework under the Copyright Act,

- Ensuring fair compensation when copyrighted works are used for AI training, and

- Designing a system that respects creators’ consent while supporting technological innovation.

This approach aims to move from a voluntary model to a mandatory value-sharing system, aligning creative rights with digital-era realities.

Reaction from the Creative and Media Industries

Australia’s decision was hailed as a major victory for the arts, media, and cultural sectors. Many see it as a defining moment for creative sovereignty in an age where AI threatens to blur the lines between originality and imitation.

Annabelle Herd, CEO of the Australian Recording Industry Association (ARIA), welcomed the decision, calling it:

“A win for creativity, Australian culture, and common sense.”

She stressed that existing copyright licensing systems are the backbone of both the creative and digital economies, ensuring that innovation continues to thrive on fair compensation and respect for intellectual property.

Industry leaders echoed similar sentiments:

- The decision reaffirms that creators have agency over how their works are used.

- It protects the economic and cultural integrity of Australia’s creative sector.

- It positions Australia as a global advocate for creator rights at a time when many democracies are struggling to regulate AI’s reach.

Why the Decision Matters Now

As AI becomes increasingly capable of replicating artistic styles, voices, and journalistic formats, the boundaries between inspiration and appropriation grow dangerously thin.

Key Concerns:

- Loss of creative control: Artists fear their works may be absorbed into AI systems without recognition.

- Economic harm: Royalties and licensing revenues risk being replaced by automated reproductions.

- Cultural dilution: Unchecked data mining could erode authenticity and local creative identity.

- Vulnerability of small creators: Independent artists, writers, and musicians often lack the legal or financial means to defend their rights.

In this context, Australia’s decision sends a powerful global message: human creativity is not raw material for machines, and innovation must coexist with respect for creators and culture.

Broader Global Implications

Australia’s stance may influence how other democracies shape their AI copyright frameworks. While tech giants lobby for open data access, Australia’s example emphasises ethical AI development built on consent, compensation, and cultural respect.

The next phase—potentially introducing a mandatory licensing system—could become a template for global AI governance, ensuring that:

- Creators receive royalties for AI use of their work,

- AI innovation remains accountable and transparent, and

- The creative economy continues to flourish alongside digital progress.

Australia’s decision redefines what responsible innovation looks like. By standing firm against unchecked AI data mining, the government has affirmed a principle that resonates far beyond its borders: technology should serve creativity, not exploit it.

As nations navigate the AI revolution, Australia’s example underscores a crucial truth: progress must be rooted in fairness, respect, and cultural integrity. Protecting artists’ rights is not anti-innovation; it is the foundation of a sustainable and ethical digital future.

Prelims Questions:

1. The government of Australia recently ruled out introducing a “text and data mining” (TDM) exception to its copyright law for AI training. Which of the following statements about this decision is/are correct?

- It means that technology companies in Australia cannot use works by Australian creators to train AI without permission.

- It implies that all AI training on copyrighted works is now banned in Australia.

- The decision underlines that Australian creators must be fairly compensated when their work is used in AI training.

Select the correct answer:

2. The Australian government has convened the “Copyright and AI Reference Group” (CAIRG). Among the following, which are key priority areas for this group?

- Examining fair/legal avenues for using copyright materials in AI.

- Clarifying how copyright law applies to works generated by AI systems.

- Introducing a blanket exemption for AI firms to use copyrighted works for free.